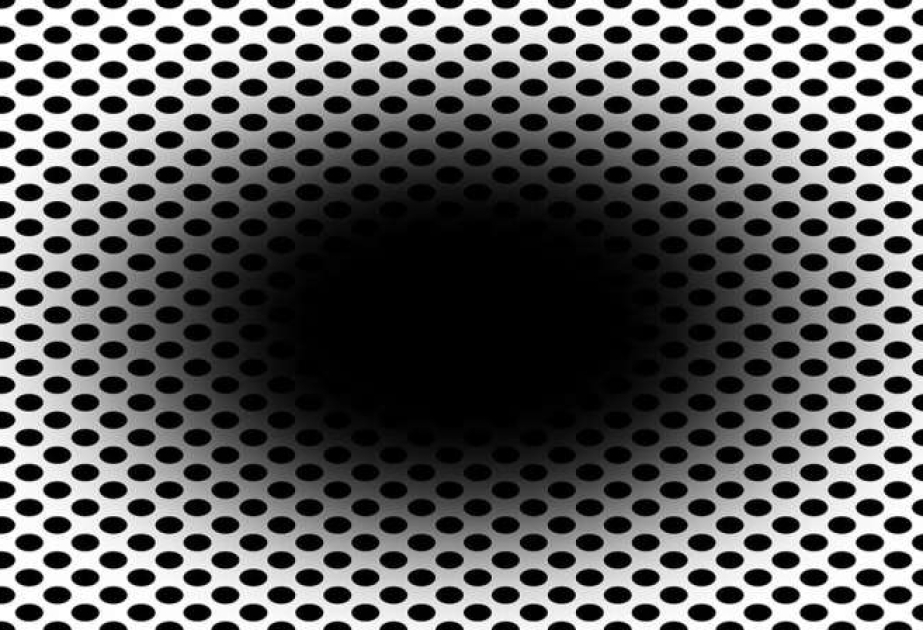

Our brain and eyes can play tricks on us, not least when it comes to the expanding hole illusion. According to Medical Xpress, a new computational model developed by Flinders University experts helps to explain how cells in the human retina make us "see" the dark central region of a black hole graphic expand outwards.

In a new article posted to the arXiv preprint server, the Flinders University experts highlight the role of the eye's retinal ganglion cells in processing contrast and motion perception, and how messages from the cerebral cortex then give the beholder an impression of a moving or "expanding hole."

"Visual illusions provide valuable insights into the mechanisms of human vision, revealing how the brain interprets complex stimuli," says Dr. Nasim Nematzadeh, from the College of Science and Engineering at Flinders University. "Our model suggests that the expanding hole illusion is caused by the interactions between the retina, processing contrast and motion perception, and the brain. It shows how retinal dynamics shape perception and can lead to motion detection after we initially perceive a complex static image.

"This research advances our understanding of contrast sensitivity and spatial filtering in visual perception, providing new directions for computational vision and neuroscience."
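Retinal ganglion cells are classically modeled as center-surround filters, and the Flinders model is described as "Difference of Gaussians"-based. A standard form of such a filter subtracts a wide inhibitory surround of width $s\sigma$ from a narrow excitatory center of width $\sigma$ (the notation here is illustrative and not necessarily the preprint's):

$$
\mathrm{DoG}(x, y) = \frac{1}{2\pi\sigma^{2}}\exp\!\left(-\frac{x^{2}+y^{2}}{2\sigma^{2}}\right) - \frac{1}{2\pi s^{2}\sigma^{2}}\exp\!\left(-\frac{x^{2}+y^{2}}{2 s^{2}\sigma^{2}}\right), \qquad s > 1
$$

$$
R(x, y) = (I * \mathrm{DoG})(x, y)
$$

Because each Gaussian integrates to 1, the two terms cancel over a uniform area of the image $I$, so the response $R$ is zero there and large only where intensity changes. This is one common way to formalize the contrast sensitivity and spatial filtering described above.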

Emeritus Professor David Powers, who has worked for decades in visual, auditory, speech and language processing, says the latest study on illusions "gives unique insights into neural processing."

"This retinal 'Difference of Gaussians'-based 'bioplausible' model can enhance AI-driven vision systems by improving how machines detect edges, textures, and motion, key elements in object recognition," says Professor Powers. "AI models based on this low-level cosine Gaussian model tend to be more efficient and match human behavior/cognition better. They tend to give more human-like vision results, as demonstrated by their ability to explain illusions, or to be fooled by them."

Using this approach, Flinders researchers have been able to model human retinal processing and reproduce common illusory effects that humans see. "Understanding this helps us do better than human-level AI vision by understanding what can go wrong and why," adds Professor Powers.
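To make the edge-detection idea concrete, here is a minimal sketch of a generic center-surround Difference of Gaussians filter in Python with NumPy. It is not the Flinders model: the function names (`gaussian_kernel`, `dog_kernel`, `dog_response`), the center width `sigma=1.0` and the surround being twice as wide are illustrative assumptions. It filters a simple dark-to-bright step to show the DoG's signature behavior: near-zero output over uniform regions and a paired negative/positive response flanking the edge.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def gaussian_kernel(sigma: float, radius: int) -> np.ndarray:
    """2D Gaussian sampled on a (2*radius+1) x (2*radius+1) grid, normalized to sum to 1."""
    ax = np.arange(-radius, radius + 1)
    xx, yy = np.meshgrid(ax, ax)
    g = np.exp(-(xx**2 + yy**2) / (2.0 * sigma**2))
    return g / g.sum()


def dog_kernel(sigma: float, surround_ratio: float = 2.0) -> np.ndarray:
    """Hypothetical helper: narrow center Gaussian minus a wider surround Gaussian.

    Both Gaussians sum to 1, so the kernel sums to ~0 and a uniform patch gives ~0 response.
    """
    radius = int(np.ceil(3 * sigma * surround_ratio))  # cover ~3 std devs of the surround
    return gaussian_kernel(sigma, radius) - gaussian_kernel(sigma * surround_ratio, radius)


def dog_response(image: np.ndarray, sigma: float = 1.0, surround_ratio: float = 2.0) -> np.ndarray:
    """Filter a grayscale image with the DoG kernel, keeping the input size."""
    kernel = dog_kernel(sigma, surround_ratio)
    r = kernel.shape[0] // 2
    padded = np.pad(image.astype(float), r, mode="edge")  # repeat border pixels
    windows = sliding_window_view(padded, kernel.shape)   # shape (H, W, k, k)
    # The kernel is symmetric, so this correlation equals a convolution.
    return np.einsum("ijkl,kl->ij", windows, kernel)


if __name__ == "__main__":
    # A vertical step edge: dark (0.2) on the left half, bright (0.8) on the right half.
    img = np.full((32, 32), 0.2)
    img[:, 16:] = 0.8
    row = dog_response(img)[16]
    print(np.round(row[10:22], 3))
```

The printed slice dips below zero just on the dark side of the edge and rises above zero just on the bright side, a Mach-band-like overshoot, while pixels far from the edge stay at roughly zero. This illustrates contrast and edge enhancement only; on its own it does not reproduce the expanding hole illusion.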

Such in-depth modeling could, in turn, enhance future applications for more biologically plausible artificial intelligence that better matches human behavior/cognition, as well as advanced deep learning architectures, researchers say. Unlike traditional edge-detection filters, the model replicates biological contrast sensitivity, making AI vision systems more robust in real-world conditions such as low-light environments or cluttered backgrounds. This has applications in medical imaging, security surveillance, and deep learning architectures designed for more human-like perception. In health care, for example, modeling abnormalities in retinal processing could support early detection of vision disorders such as glaucoma, macular degeneration, and diabetic retinopathy.

In medical image processing, it could be used to improve the clarity of MRI, CT, and X-ray scans by mimicking the human visual system's edge detection. It could also support prosthetic vision, helping to develop bionic eyes by optimizing how artificial vision systems process images for blind patients. Beyond medicine, the model could have applications in aerospace and defense, where rapid and accurate object detection is critical, enhancing target and attack recognition in fast-moving scenarios such as drones identifying objects mid-flight or pilots detecting threats in complex visual fields.